New Year, same old blog. A new style, some broken links and some fixed-broken links. Here’s to yet another WordPress-based blog! Now for some background.

This website has been through a small number of hosts. It started off being hosted on a wordpress.com subdomain. I then migrated it over to a cheapo shared hosting solution, which worked pretty well for the low volume of traffic that it served. As we enter 2019 I am pleased to present the blog from a cheapo VPS, which is fronted by a free CDN.

Why change?

Coming into 2018 there were two aspects that prompted a reconsideration of my hosting:

- I started to take a deeper interest in running my own services over the web protocols I was spending so much time working on.

- The march of browsers towards treating http:// URLs as insecure and, by extension, restricting powerful features (Web Platform APIs like Service Worker) to secure contexts.

My old shared hosting was starting to feel restrictive in the face of these changes, but I was in no rush to change things. Domains are cheap as chips, so I switched focus to a different project: https://quic.stream.

quic.stream

The quic.stream domain was a fun name that was going cheap. For those not familiar with the QUIC protocol, it is a UDP-based, always-secure, multiplexed transport protocol undergoing standardisation in the IETF. It achieves multiplexing within a single QUIC connection by the use of logical streams.

Around the time I purchased the domain, the options for running a QUIC server that could speak to web browsers were pretty limited. The most straightforward way to get things working was to use Caddy server, a Go-based web server that made use of the excellent quic-go library. If you’re interested in trying it out the Wiki has some instructions that may, or may not, work for you.

Word of warning: at the time, this was based on Google’s earlier QUIC specification. Google Chrome was the only browser that seemed to interop properly. Google continue to experiment and the interop gets broken pretty often. If you visit https://quic.stream and try the connectivity test you are likely to find that QUIC fails, and there is no way for me to detect in JavaScript that this is because the browser outright cobbled it.

Simple-stupid security

Anyway, none of that matters much. What is of more interest is that QUIC’s “always secure” principle matches Caddy’s “Automatic HTTPS on by default” design philosophy. Caddy achieves this by means of Let’s Encrypt, and it does it all behind the scenes without making you waste your time on figuring anything out.

As a technical user I’ve gotten my head around the acronym soup of PKI, CSR, PEM, CRT. However, it all just becomes a PITA for something that is just supposed to be minimal effort or fun on the weekends. In contrast, Caddy and Let’s Encrypt made things so simple-stupid that I was able to do a live demo during a lecture to students at Lancaster University. This took the form of provisioning a new VPS instance (with Caddy pre-installed), creating a new DNS subdomain, and rolling a Hello World config. It took less than 10 minutes.

Tuning web security to the N-th degree

After creating a rough and ready site with Caddy that had great transport security, my attention turned to web security: CSP, CORS, HSTS, SRI etc. I’d never really looked at this before and found it pretty tedious to get right. I appreciate the difficulties in securing the Web Platform in complex User Agents but it sucks to have to rewrite simple button element script calls because of possible injections.
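To make the button complaint concrete, here is a minimal sketch of the kind of response headers involved. The policy values are illustrative assumptions, not the actual quic.stream configuration, and `build_csp` is a hypothetical helper. The point is that a `script-src` without `'unsafe-inline'` makes the browser refuse inline handlers such as `onclick="..."`, so they have to move into an external script.

```python
def build_csp(directives):
    """Serialise {directive: [sources]} into a Content-Security-Policy value."""
    parts = []
    for name, sources in directives.items():
        # Directives without sources (e.g. upgrade-insecure-requests) stand alone.
        parts.append(" ".join([name, *sources]))
    return "; ".join(parts)


# Hypothetical, deliberately strict policy: nothing is allowed unless listed,
# and there is no 'unsafe-inline', so inline event handlers are blocked.
policy = {
    "default-src": ["'none'"],
    "script-src": ["'self'"],
    "style-src": ["'self'"],
    "img-src": ["'self'"],
    "upgrade-insecure-requests": [],
}

# Example header set; the HSTS max-age is an assumed value (two years).
headers = {
    "Content-Security-Policy": build_csp(policy),
    "Strict-Transport-Security": "max-age=63072000; includeSubDomains",
    "X-Content-Type-Options": "nosniff",
}

for name, value in headers.items():
    print(f"{name}: {value}")
```

How these headers get attached to responses depends on the server; the sketch only shows what the values look like.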

After much effort, quic.stream scores an A+ on the Mozilla Observatory’s HTTP Observatory tests. You can view results at: https://observatory.mozilla.org/analyze/quic.stream

What this exercise taught me is that I benefited from having fine-grain control of the server behaviour. For example, explicitly controlling HTTP headers and using scripts on the server to generate SRI hash values.
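As an illustration of the SRI part, here is a rough sketch of how such a script might compute an integrity value. It is not the script I actually run; the function names and the `/js/app.js` path are made up for the example. An SRI value is the hash algorithm name, a hyphen, and the base64-encoded digest of the exact bytes being served.

```python
import base64
import hashlib


def sri_hash(content, algorithm="sha384"):
    """Return an SRI integrity value (e.g. 'sha384-...') for raw file bytes."""
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def script_tag(src, content):
    """Build a script element that pins the resource to its current hash."""
    return (
        f'<script src="{src}" integrity="{sri_hash(content)}" '
        f'crossorigin="anonymous"></script>'
    )


# Hypothetical usage: in practice the bytes would be read from the file on disk.
print(script_tag("/js/app.js", b"console.log('hello');"))
```

The hash has to be regenerated whenever the file changes, which is why it is handy to do this on the server rather than by hand.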

Shared hosting rubber gloves

After the success with one site, I took another look at this one. I wanted to secure it using free certificates from Let’s Encrypt, and I wanted to have more control over some of the lower level stuff.

The admin panel of the shared hosting felt like trying to scratch with rubber gloves on. Worse still, they wanted to nickel-and-dime me to pay for certificates.

Migrating the whole site to something else would require effort and time I didn’t have over the summer. So I took a different kind of quick, simple-stupid measure: I signed up for Cloudflare’s free CDN service.

In the shadow of HTTPocalypse, my personal site on shared hosting is feeling neglected. No Let’s Encrypt possible, so testing a move over to @Cloudflare for TLS termination.

— Lucas Pardue (@SimmerVigor) July 25, 2018

This worked really easily and took about 10 minutes. I signed up, enabled 2FA, and followed the instruction to change my nameservers to Cloudflare’s. I got TLS 1.3 termination immediately, which was cool!

However, since my old shared hosting was insecure, I needed to enable Cloudflare’s Flexible SSL mode (see this explanation). In essence, although the connection between User Agent and Cloudflare’s edge was secure, the connection to my shared hosting origin was insecure. There was no complete end-to-end security.

Now you might say that the site doesn’t handle much of importance but that doesn’t matter. For a long list of reasons why security is important regardless of content, check out Troy Hunt’s blog post Here’s Why Your Static Website Needs HTTPS.

Although the migration was smooth, I found some issues with mixed-content warnings while using Flexible SSL. In my haste to fix this I got into some weird URL issue that ultimately meant Cloudflare couldn’t load any images from my origin. Rather than waste time pursuing this, I decided the long term solution would be to migrate.
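For anyone chasing similar warnings, the usual cause is a page served over https:// that still references subresources via http:// URLs. Below is a simplified sketch of how one could scan a page for them. It is not something I used at the time, and the tag list is an assumption that covers only the common cases.

```python
from html.parser import HTMLParser


class MixedContentFinder(HTMLParser):
    """Collect subresource URLs that would be fetched over plain HTTP."""

    # (tag, attribute) pairs that make the browser fetch something.
    # Ordinary <a href> links are navigation, not mixed content, so they are skipped.
    FETCHING = {
        ("img", "src"), ("script", "src"), ("iframe", "src"),
        ("audio", "src"), ("video", "src"), ("source", "src"),
        ("link", "href"),
    }

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in self.FETCHING and value and value.lower().startswith("http://"):
                self.insecure.append((tag, value))


def find_mixed_content(html):
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


# Hypothetical page fragment using example.com URLs.
page = (
    '<a href="http://example.com/">a link</a>'
    '<img src="http://example.com/a.png">'
    '<img src="https://example.com/b.png">'
)
print(find_mixed_content(page))
```

With WordPress the offending URLs often live in old post content, so a scan like this only tells you where to look, not how to fix it.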

Rolling my own

So I finally found the time to take a look at rolling my own WordPress hosting. On first inspection running a LAMP-like stack using Caddy seemed a bit daunting. And I want PHP for some other future project, so the thought of changing blogging software was out of the picture.

I decided to go with a vanilla LAMP stack. And I was excited to use Apache HTTP Server because I’d heard a few things about the newish mod_md module. In this case md means Managed Domains. The module provides the means for automatic Let’s Encrypt certificate management. The other bonus was that I’d shared a glass of wine with the author, Stefan Eissing, in the past. (Stefan also developed the mod_h2 module, which provides HTTP/2 support in Apache.)

Now, unfortunately I somehow got completely sidetracked during the setup phase of all of this. Rather than using mod_md I ended up using certbot.

The reason is that I was very excited about Let’s Encrypt wildcard certificates when they were announced in March 2018. The benefit of certificates with wildcarded subdomains is that I can reuse the same one across the various experiments I have in mind this year, without having to go through a Let’s Encrypt dance each time. This is especially helpful for other pieces of software that have no automatic capability built in.

Flicking through the documentation, it states:

If you want to obtain a wildcard certificate using Let’s Encrypt’s new ACMEv2 server, you’ll also need to use one of Certbot’s DNS plugins.

Since I was a Cloudflare customer on this domain, I could use a Certbot plugin – certbot-dns-cloudflare.

All-in-all the Certbot process wasn’t too bad. I have a certificate and private key usable in a few contexts, and Certbot is responsible for renewing it before it expires (Let’s Encrypt certificates are valid for 90 days).
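Since the certificate is reused in a few places, it is worth being able to check how long it has left. Here is a small sketch using only the Python standard library. The 30-day threshold reflects my understanding of when Certbot attempts renewal by default, so treat it as an assumption, and the function names are my own.

```python
import socket
import ssl
from datetime import datetime, timezone


def fetch_not_after(host, port=443):
    """Connect to a live server and return its certificate's notAfter string."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_remaining(not_after, now):
    """Days between `now` (timezone-aware) and a notAfter string such as
    'Mar 31 00:00:00 2019 GMT'."""
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    return (expires - now).total_seconds() / 86400


def needs_renewal(not_after, now, threshold_days=30):
    # Assumed threshold: renew once fewer than 30 days of validity remain.
    return days_remaining(not_after, now) < threshold_days


# Offline example with a made-up expiry date.
now = datetime(2019, 1, 1, tzinfo=timezone.utc)
print(days_remaining("Mar 31 00:00:00 2019 GMT", now))
```

Calling `fetch_not_after("example.com")` against a real host needs network access, which is why the example above sticks to a fixed date string.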

I would have liked to get mod_md working. However, I was also a bit lazy and relied on my distro’s packaged Apache and my familiarity with the more conventional Apache config directives. It would be good to find out if the module supports what I ended up doing.

Was it worth it?

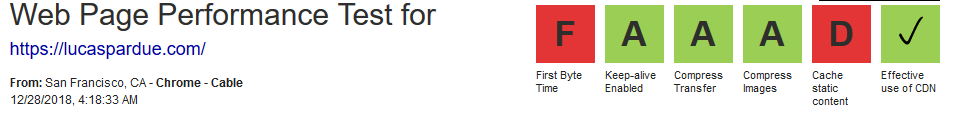

At the most superficial level, probably not. I was able to fix broken images but they are pretty pointless anyway.

At a more fundamental level, I’d say the migration was worth it. I have the experimental platform and fine-grained control that I wanted, while at the same time providing more robust end-to-end security. Furthermore, the VPS is more performant and has more flexibility to manage scaling. When combined with the capabilities of a CDN, I think this blog has the potential to be a lot more web performance happy. However, WebPageTest marks me down in a few areas, so there is still work to do…